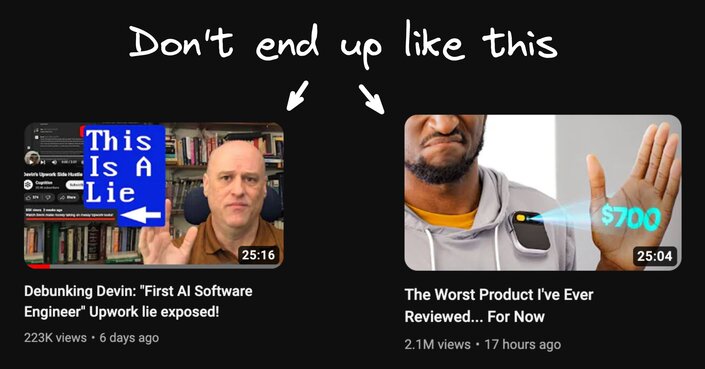

So Devin, the AI programmer, is a lie. And the AI pin is the worst product Marques has ever reviewed.

AI product demos are faking it and flopping left and right. So how can you build an AI product that doesn't flop?

We've had great success lately building AI products that get actual users and make actual revenue.

Let me show you what worked (and didn't work) for us that could apply to building new products or even just new features within an existing company.

The first one, for the love of goodness, is don't just build a thin GPT wrapper. Build something real.

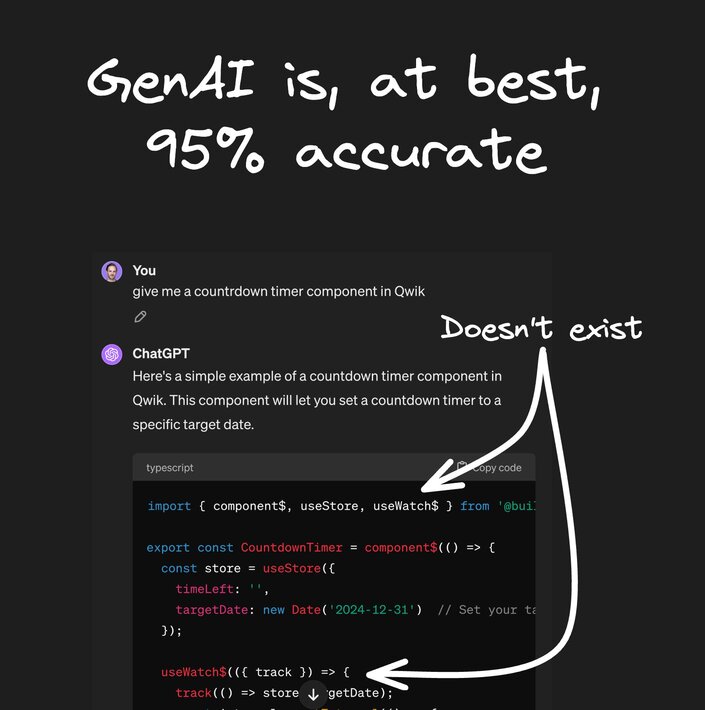

First, let's not forget — generative AI is, at best, 95% accurate. At worst, it's completely wrong and hallucinating.

But even worse than that, it's great at tricking you into thinking it's right when it's not.

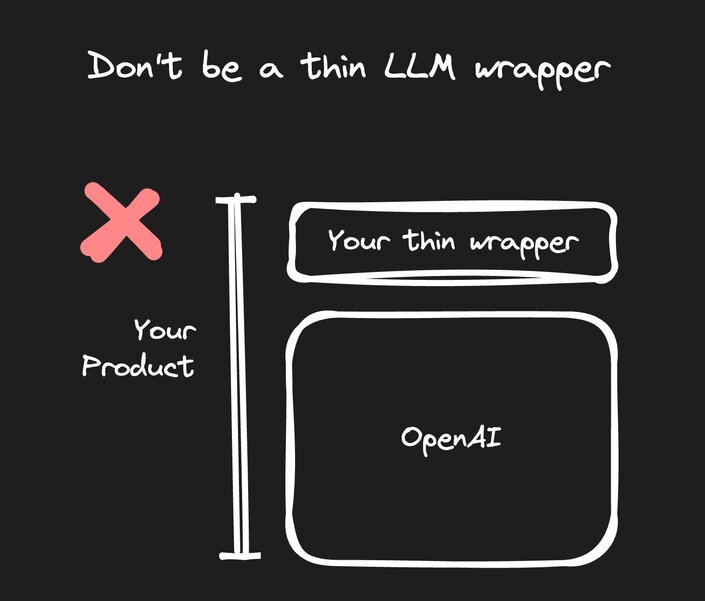

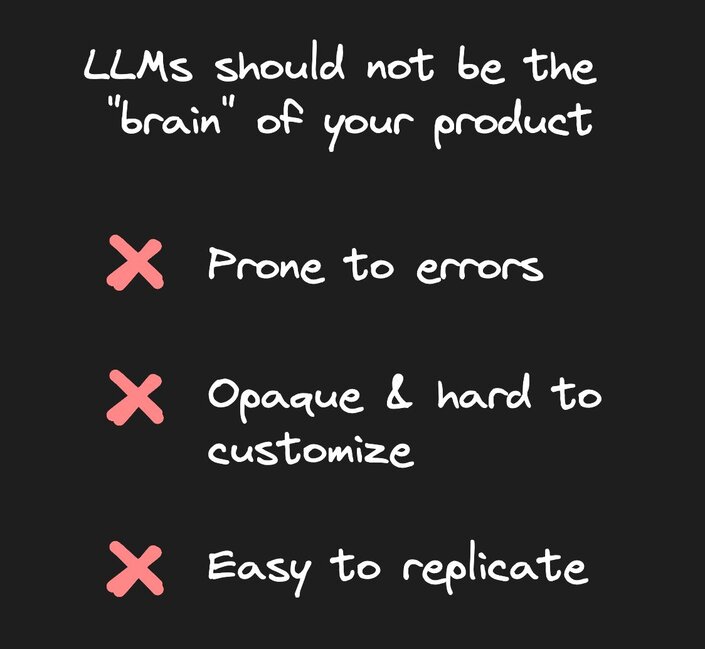

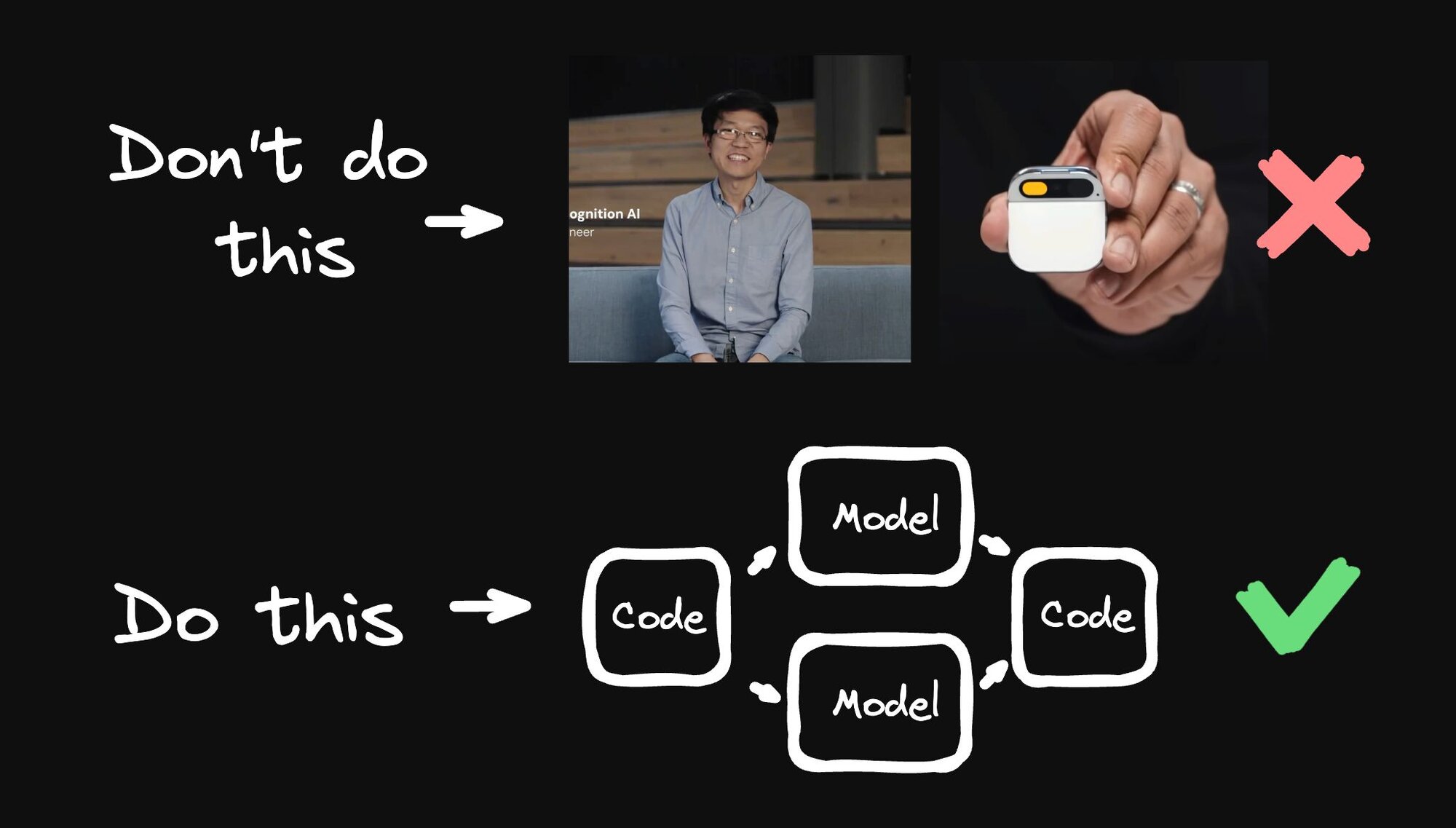

The anti-pattern to avoid is making an LLM the brains of your product.

If it's the core thing that's actually providing the value, and you just wrap some UI on top, you're in great danger of building something bad that's error-prone or too opaque and hard to customize.

Or if you're lucky enough to find something that works, anybody can easily compete with you, and you have no differentiation.

And OpenAI can release what you have as a feature anytime. If not OpenAI, then some other startup will.

Hint: Devin and the software in the AI pin are thin LLM wrappers. Don't be like them.

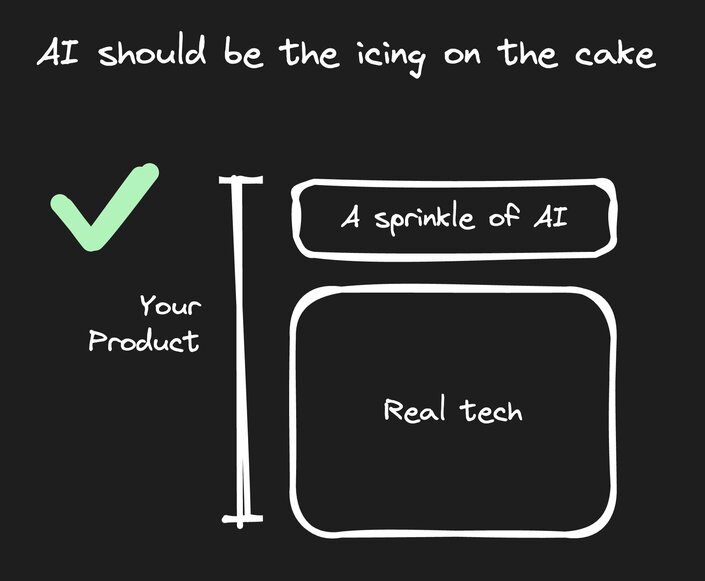

Instead, AI needs to be more like the icing on the cake: you built some real technology, and AI becomes some essential glue. If it fails, it doesn't make or break the product, but when it succeeds, it really adds value for your users in a unique and powerful way.

Let me stop talking in vague and abstract terms and give some real-world examples from our own learnings. For our use case, I want to address code automation, as in, how can we use AI to make coding faster and easier?

By removing tedious and redundant work, developers can move faster and enjoy what they're doing. And if we do this well enough, we can set our goal to help people who aren't developers at all have some degree of coding superpowers.

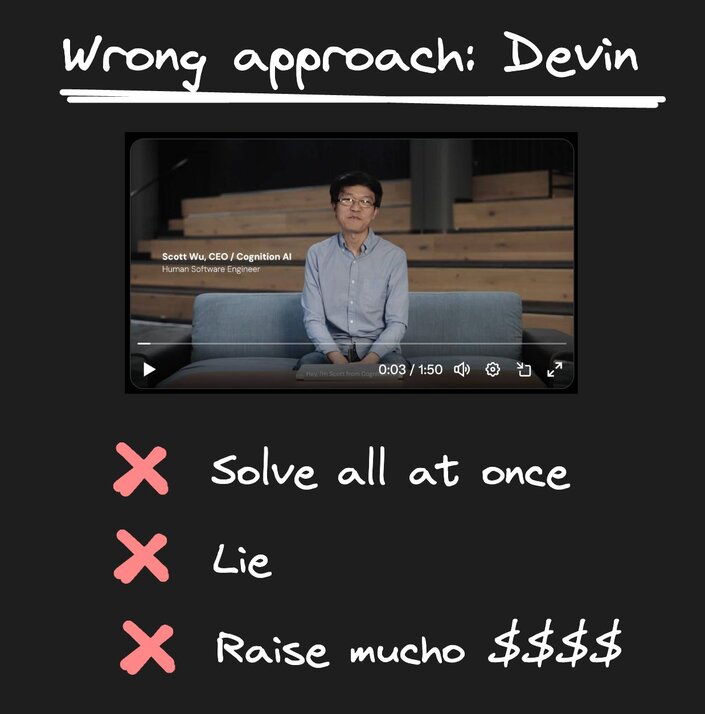

Let's start with the wrong way to solve this problem. I would not recommend doing what Devin did, where they're trying to act like they've solved everything at once — the AI can do everything programmers can do and be paid for it.

That meant giving very misleading information and demos that are full of lies, and using that to raise a ridiculous amount of VC money.

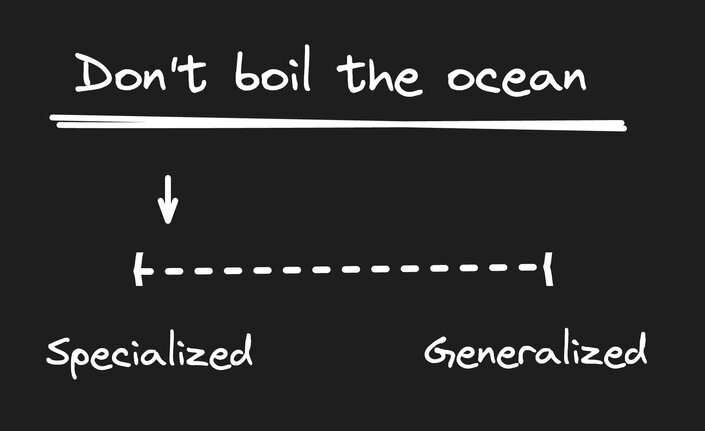

If we ignore the problems of lies and deceit, they're still making one fundamental mistake: they're trying to boil the ocean. They're trying to make an everything AI. Everything products are pretty much never a good idea.

The better approach is to start specialized. Really nail some group of people's problem in a consistent and well-executed way.

And then incrementally expand your solution to be more generalized, to appeal to more people.

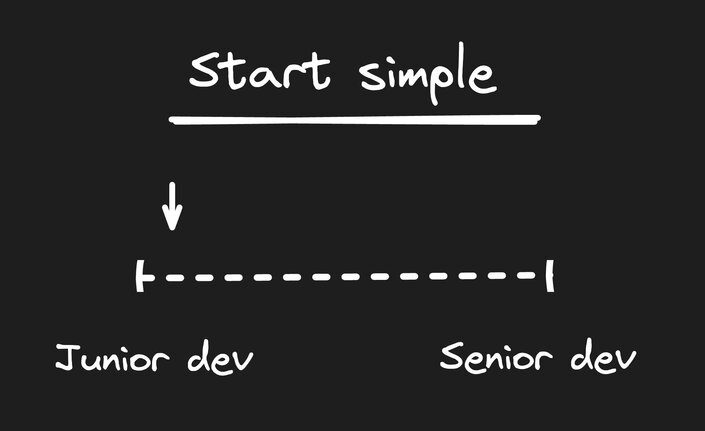

And for our case, when talking about automating development, we want to start simple.

Let's not start with the most advanced problems in computer science. Let's instead start with the tedious work that you would normally have a junior developer do.

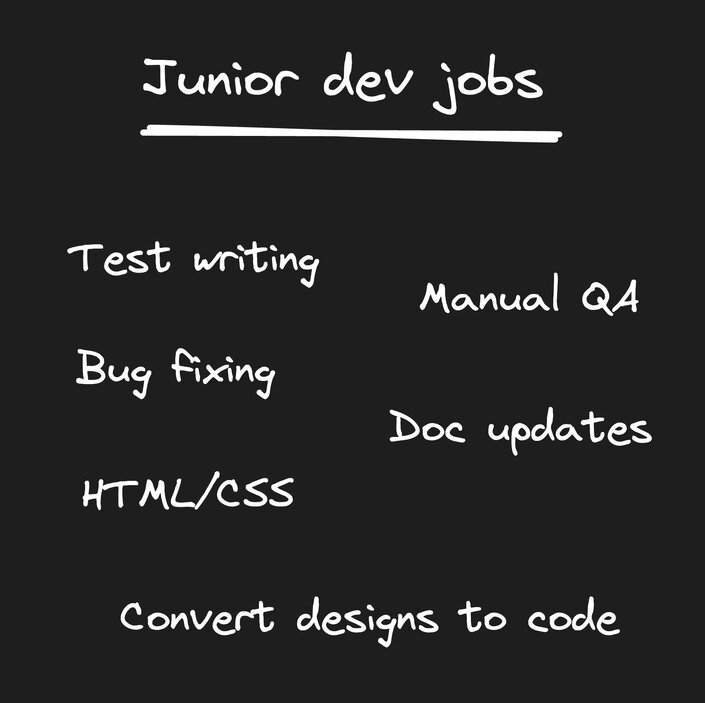

So let's make a list of what junior devs tend to do — writing tests, bug fixes, updating documentation, HTML and CSS, and turning designs into code.

This can give us a sense of what types of problems we might want to solve. But then the question is, where are you strong?

If you're an existing business, what does your product do? Or what customers do you serve?

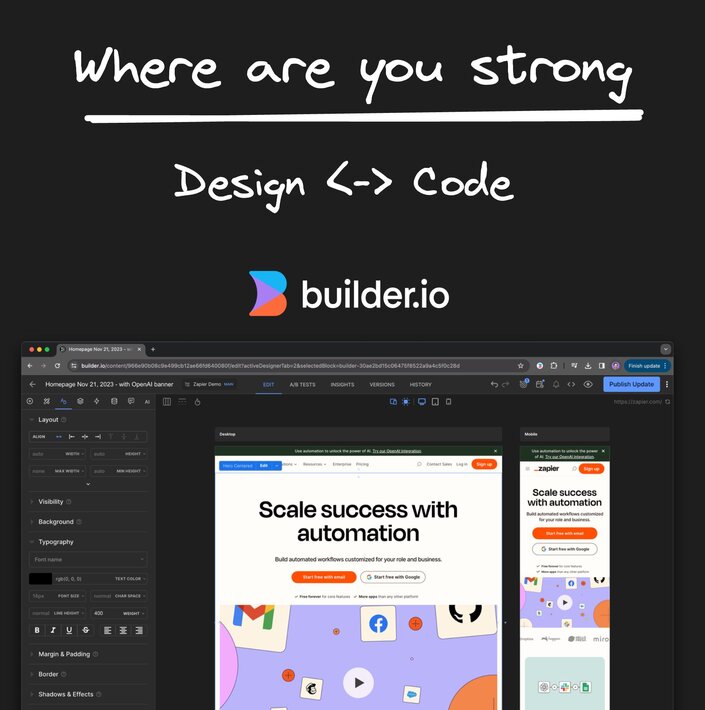

In our case with Builder.io, we already make a product where you can drag and drop with your React or other framework components, so people like marketers, designers, and product managers can create pages and other things within your existing apps.

And in our case, one of the most common things we noticed people doing is reproducing Figma designs in Builder.io by hand.

Wouldn't it be so much nicer if they could just hit a button in Figma and pull the design into Builder.io where they can publish the update to the existing app via our API, or grab code they can copy and paste into the codebase?

But then the question becomes where to start, because LLMs are not good at everything. In fact, they're bad at a lot of things, if not most things. And we don't want to build a product that only works sometimes; we need our product to work pretty much every time.

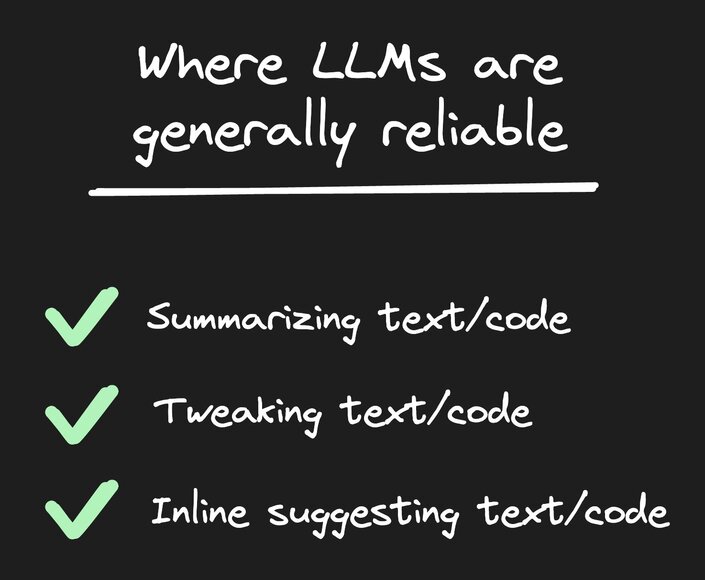

Here's a short list of things that I have found LLMs to be pretty reliable at — consistently being at least pretty good at these types of tasks.

You might say, oh, well you could fine-tune an LLM to fill in some gaps. In our experience, don't count on that. Fine-tuning doesn't help very much, and you definitely won't teach an LLM to be good at something it's not already pretty good at.

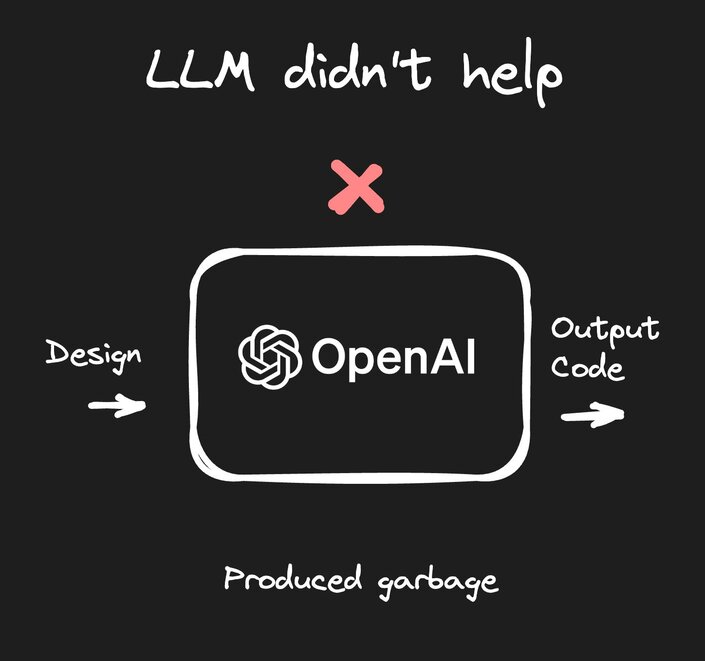

So we went about trying to find out how we could use an LLM to take a design and output code. And our results were utter garbage. It was very, very, very bad at this.

We tried every technique under the sun, from fine-tuning to prompt engineering to whatever else you'd imagine, and nothing worked even a little bit.

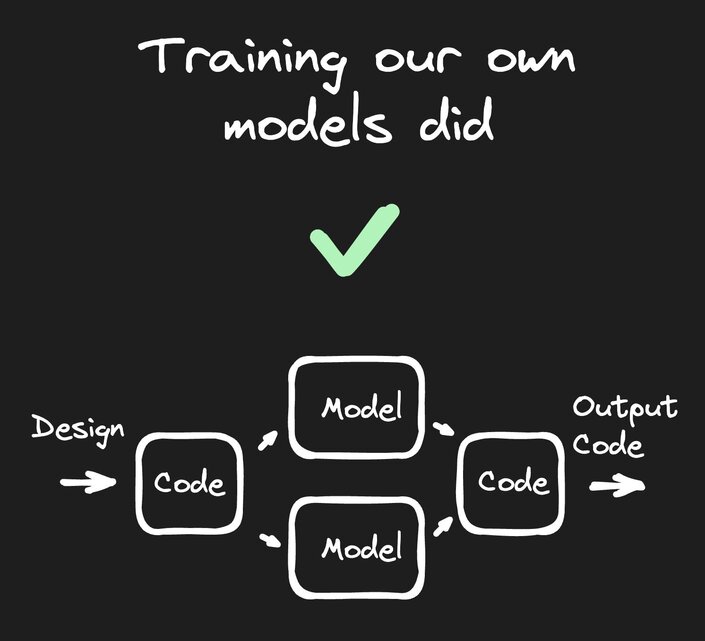

So, what we did instead is we trained our own models.

We started by trying to solve the problem with no AI at all, by finding out how far pure code could get us, and when we found specific problems that were very hard to code by hand, we started training specialized models geared exactly at that problem.

For more depth on that topic, I have two detailed posts on this already: One about our overall approach to AI, and how we learned not to use LLMs for everything and train our own models, and another one on how exactly to train your own models.

In many cases, it's not nearly as hard as it might seem. Now in our case, we could turn designs into output code very reliably, very quickly, very cheaply, and we could customize anything based on any feedback we got.
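To make the "pure code first" idea a bit more concrete, here's a minimal sketch of the kind of rule you can write by hand before reaching for any model. It guesses whether a group of design layers should become a flex row or a flex column, purely from their bounding boxes. To be clear, this is not our actual implementation: `Box`, `stacksCleanly`, and `inferDirection` are names I made up for illustration. The interesting part is the `"unknown"` result, which is where a hand-written rule gives up and a specialized model might take over.

```typescript
// Hypothetical sketch: these names are illustrative, not Builder.io's real code.
interface Box {
  x: number;
  y: number;
  width: number;
  height: number;
}

type Direction = "row" | "column" | "unknown";

// True when, sorted along one axis, each box ends before the next one begins.
function stacksCleanly(boxes: Box[], axis: "x" | "y"): boolean {
  const size = axis === "x" ? "width" : "height";
  const sorted = boxes.slice().sort((a, b) => a[axis] - b[axis]);
  return sorted.every(
    (box, i) => i === 0 || sorted[i - 1][axis] + sorted[i - 1][size] <= box[axis]
  );
}

function inferDirection(children: Box[]): Direction {
  // With zero or one child there is nothing to arrange, so default to a column.
  if (children.length < 2) return "column";
  const alongX = stacksCleanly(children, "x");
  const alongY = stacksCleanly(children, "y");
  if (alongX && !alongY) return "row";
  if (alongY && !alongX) return "column";
  // Overlapping or ambiguous layouts: the kind of case plain rules handle
  // poorly, and a candidate for a small specialized model.
  return "unknown";
}
```

Simple side-by-side or stacked layers get a definite answer from plain code, and only the ambiguous leftovers need anything smarter.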

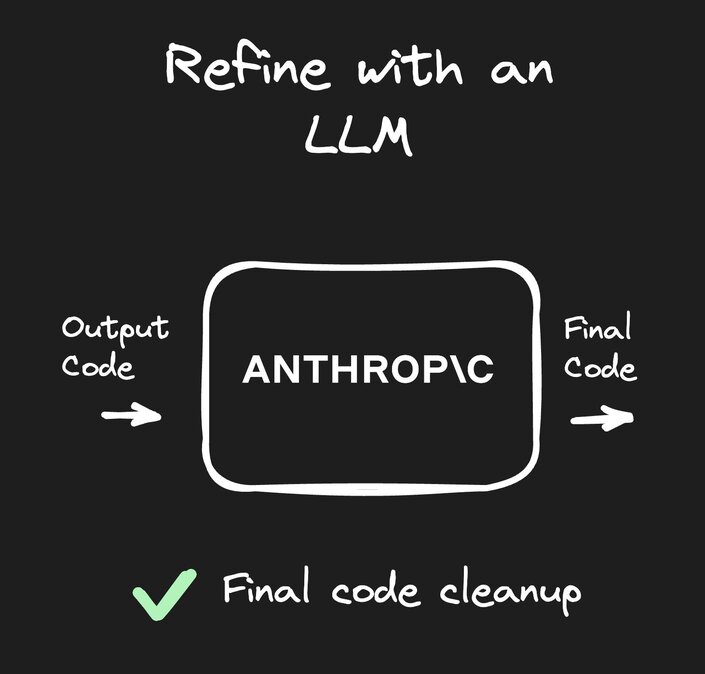

We only had one remaining challenge that was hard to train our own models for, which was taking our default output code and cleaning it up to be better named, better structured, and generally refactored.

But if you remember, tweaking and modifying code is something LLMs are actually pretty good at. And so this became our final step — taking the output code we generated with our own code and models, and then cleaning it up.

At this step, we tried tons of LLMs, and for our use case, Anthropic's models were the best by a pretty good margin.

And this landed us with a pretty robust toolchain — we had coded our own models and used an LLM for the icing on top, and the whole system worked quite well.
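Here's a rough sketch of what "LLM as icing" can look like in code. The deterministic part of the pipeline has already produced working code, and the LLM cleanup is treated as optional: if the call throws, or the result fails a sanity check, you ship the baseline. Everything here is an assumption for illustration. `Refine` stands in for whatever LLM client you use, and the check (the cleaned-up code must keep the same JSX tags) is just one simple rule you might pick, not the one we use.

```typescript
// Hypothetical sketch: `Refine` stands in for any LLM call; it is not a real API.
type Refine = (code: string) => Promise<string>;

// Collect opening JSX/HTML tag names, e.g. "<div><Button /></div>" -> ["Button", "div"].
function tagNames(code: string): string[] {
  const matches: string[] = code.match(/<[A-Za-z][\w.]*/g) || [];
  return matches.map((m) => m.slice(1)).sort();
}

// Assumed sanity rule: the refined code must be non-empty and contain exactly
// the same tags as the baseline. A refactor may rename and reformat, but it
// should not add or drop elements.
function isSafeRefinement(baseline: string, refined: string): boolean {
  if (refined.trim() === "") return false;
  const before = tagNames(baseline);
  const after = tagNames(refined);
  return before.length === after.length && before.every((t, i) => t === after[i]);
}

async function withOptionalPolish(baseline: string, refine: Refine): Promise<string> {
  try {
    const refined = await refine(baseline);
    return isSafeRefinement(baseline, refined) ? refined : baseline;
  } catch {
    // LLM unavailable or errored: the deterministic output still ships.
    return baseline;
  }
}
```

The point of the design is that the LLM can only make the output nicer, never break it. If the model has a bad day, users get the unpolished version instead of an error.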

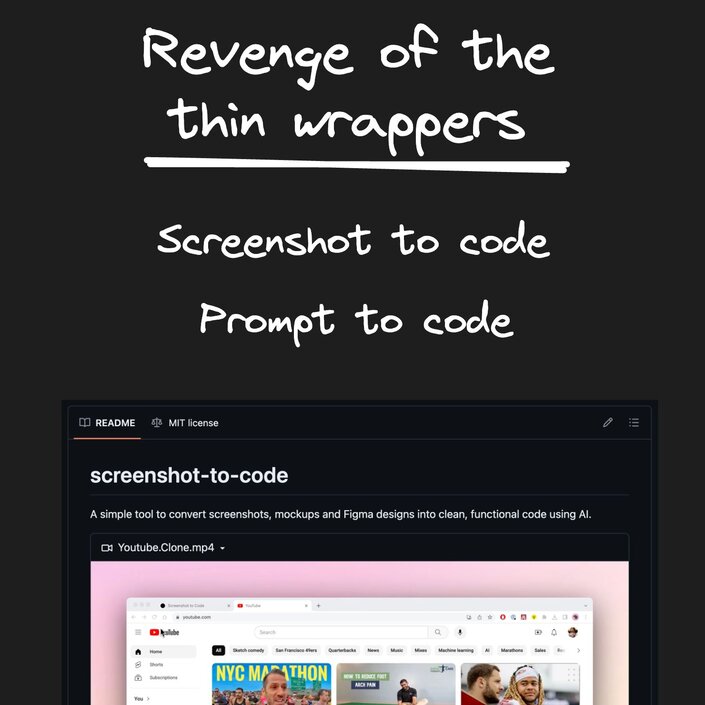

But then, funny enough, soon after multimodal LLMs came out, a new wave of thin wrappers was released: people wrapping GPT-4 Vision so you could upload a screenshot and get code out the other side.

And these at first glance actually looked pretty good. Sometimes the output went haywire and sometimes it was not bad. But they were all terrible in one key way.

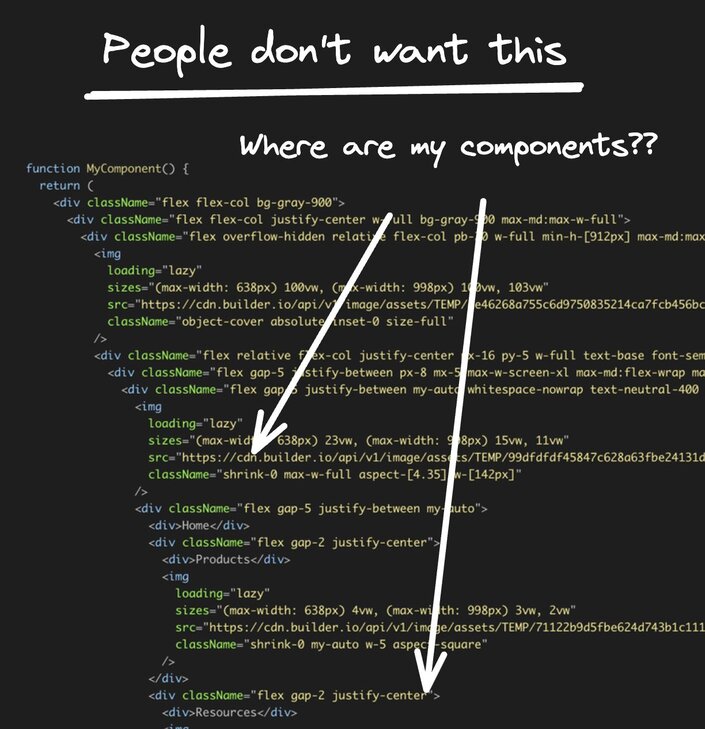

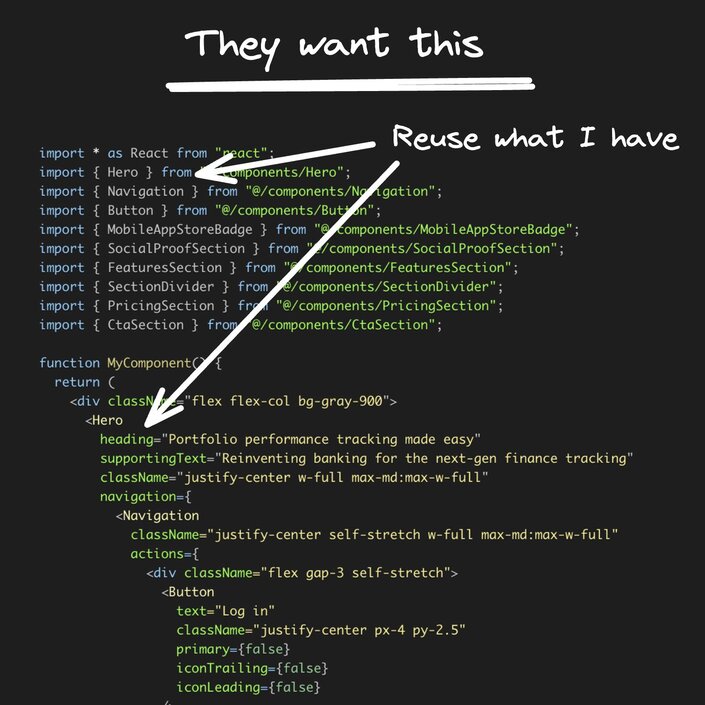

And this is why you have to remember to always get customer feedback. We put our AI toolchain in people's hands, and we learned one critical thing that people need that no LLM wrapper is going to easily be able to do. People don't want generic output code.

They don't want every design or screenshot to generate new code every time. That's kind of ridiculous.

Even if the code were high quality, if every time you took a design and made new code and never reused existing components or existing code, your codebase would get so bloated and unmanageable so fast.

And every one of these tools operated that way, including ours.

Tools like these need to understand your existing components, your existing design systems, the libraries you use, and generate code like what you would actually write.

Importing those components and using them just like you. Anything else is really just a cool demo and not that useful.

So the question comes up, how do you teach an LLM about your design system? And more importantly, we don't want an LLM to just guess at our design system. We need true determinism here.

We need to make sure when a design is designed one way, it always turns into components in your code in a very specific way. We don't want random, unexpected outputs throughout the system. So, how do you make an LLM deterministic?

And the answer is...you don't.

And thank goodness we were not a thin LLM wrapper. Remember, you don't want the LLM to be the brains of your product. Because when you need to add some new feature that LLMs aren't good at, you're left without options.

But in our case, our models can understand your components and design system. We built the tooling to scan and find the components in your code, map them to components you have in Figma, and give you deterministic mapping functions that we generate and you can edit.

So we added new models to the chain and made a deterministic system to turn designs using design systems into code exactly as you would have written.

Well, at least close enough that with a few edits, you're pretty much done.
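To give a feel for what a deterministic mapping function could look like, here's a small sketch. It is not our real API: the types (`FigmaInstance`, `CodeComponent`), the `mappers` table, the `"Button/Primary"` component name, and the `@/components/Button` import path are all hypothetical. The idea is just that each Figma component gets a plain, editable function that says exactly which code component and props it becomes.

```typescript
// Hypothetical sketch: every name and path below is invented for illustration.
interface FigmaInstance {
  componentName: string; // name of the component in the design file
  props: Record<string, string>; // variant properties and text overrides
}

interface CodeComponent {
  importFrom: string;
  name: string;
  props: Record<string, string>;
  children: string;
}

type Mapper = (node: FigmaInstance) => CodeComponent;

// One plain function per design component. No model is involved here,
// so the result is fully determined by the input.
const mappers: Record<string, Mapper> = {
  "Button/Primary": (node) => ({
    importFrom: "@/components/Button",
    name: "Button",
    props: { variant: "primary", size: node.props["Size"] || "md" },
    children: node.props["Label"] || "",
  }),
};

// Render JSX with props in sorted order so output is stable across runs.
// Note: attribute values are not escaped in this sketch.
function render(c: CodeComponent): string {
  const attrs = Object.keys(c.props)
    .sort()
    .map((key) => ` ${key}="${c.props[key]}"`)
    .join("");
  return c.children
    ? `<${c.name}${attrs}>${c.children}</${c.name}>`
    : `<${c.name}${attrs} />`;
}

// Returns null when no mapping exists, so the caller can fall back to generic output.
function toCode(node: FigmaInstance): string | null {
  const known = Object.prototype.hasOwnProperty.call(mappers, node.componentName);
  return known ? render(mappers[node.componentName](node)) : null;
}
```

Because the mapping is ordinary code, the same design always produces the same component usage, and if you disagree with the result you edit a function instead of rewording a prompt.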

And while the thin wrappers can continue to bang their heads against trying to figure out how to offer a similar feature, we can keep taking new feedback and improving our product at a much faster rate than they can.

Bill Gates has a very good quote about this — that we always overestimate the change that will occur in the next two years, and underestimate the change that will occur in the next 10.

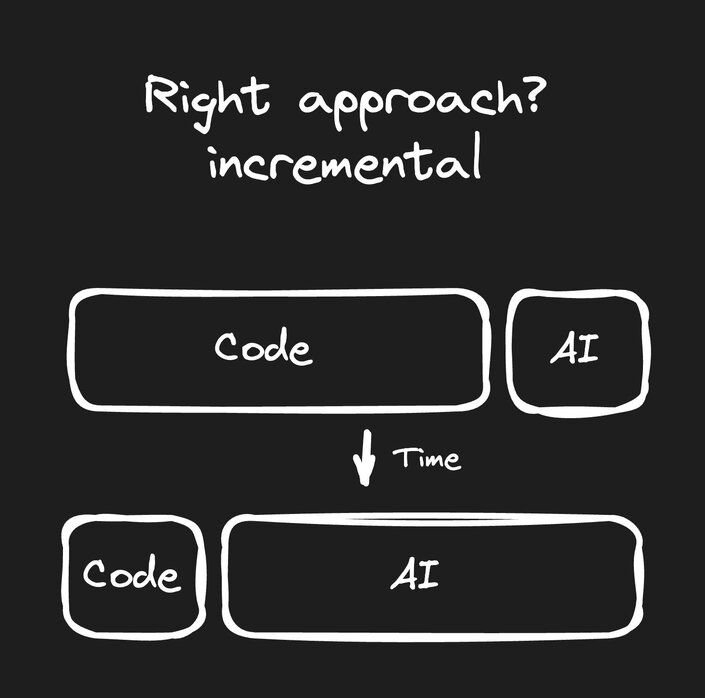

AI is phenomenal technology, but LLMs are not going to solve all of your problems tomorrow. What you need is an incremental approach.

Today you need mostly code and then add AI for specialized problems. Over time, you can continue to add more AI as needed, and maybe in 10 years, your product will be mostly AI.

But you'll find success doing it. You'll get real customers who pay you real money, and you'll be sure you're solving real problems, while other people try to boil the ocean and ultimately fail.

And hey, don't take my word for any of this. Unlike Devin, we ship our products for everyone to try before we make claims that we've changed the world.

You can try out our product and let me know if you think it's a flop, like the AI pin, or if it actually speeds up your workflow as expected. Tweet your feedback at me anytime; I try to reply to everyone.

Builder.io visually edits code, uses your design system, and sends pull requests.
