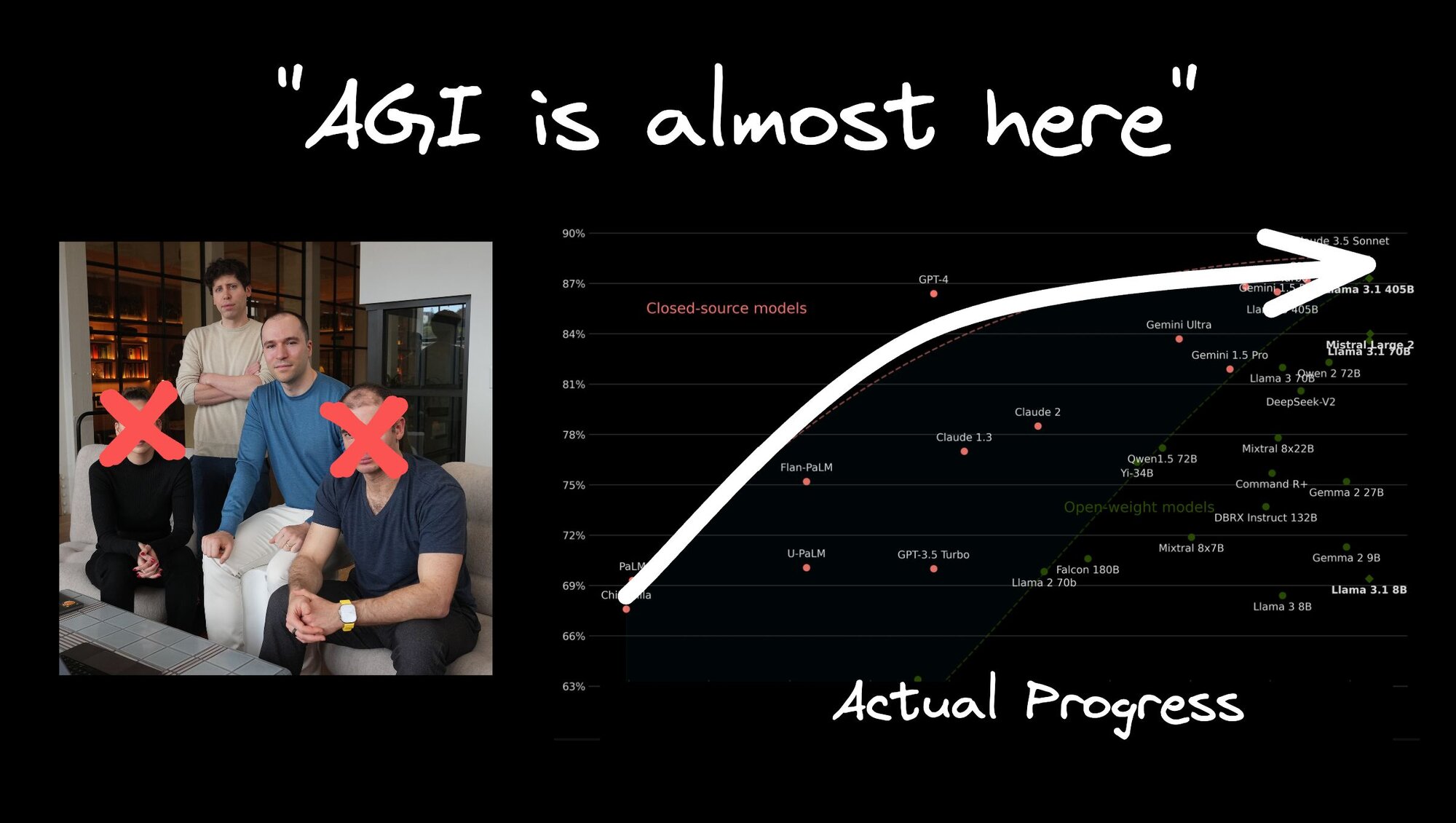

OpenAI and other big AI vendors keep pushing one huge lie, even though the data suggests they're wrong. Sam Altman loves to preach that Artificial General Intelligence (AGI) is right around the corner.

"We're about to give you AGI," he claims, even as key leaders leave the company. In my opinion, if I were really right around the corner from AGI, I wouldn't be leaving.

At the same time, their latest model looks like GPT-4 with a chain-of-thought prompt (already a common technique) plus a really long output window, not to mention how incredibly slow it is.

The truth about AI model progress

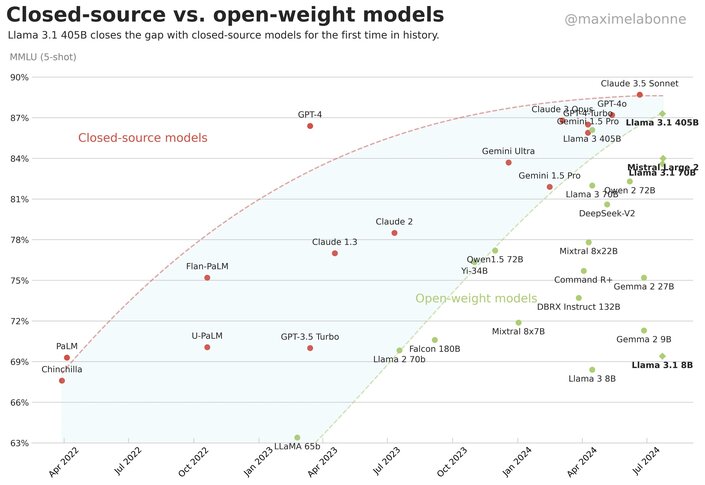

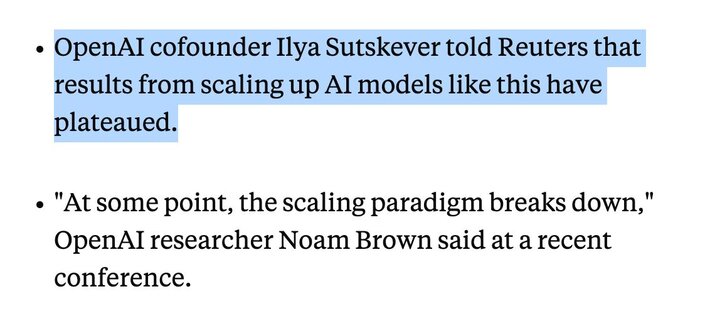

OpenAI seems to be noticing the truth here: new models are plateauing, with each one only slightly more effective than the one before.

So instead of shipping a GPT-5 that's probably only marginally better, yet larger and more costly, they're trying to make GPT-4 work better for different use cases.

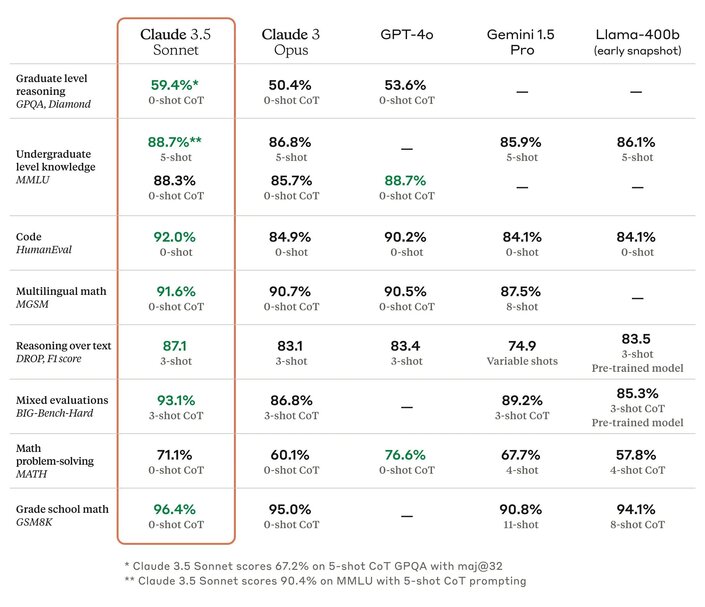

At the same time, Anthropic consistently produces better models, but even those are only incrementally better with each launch. That's a big change from the GPT-2 to GPT-3 to GPT-3.5 days, when we saw huge leaps each time. We're just not seeing that anymore.

Source: Business Insider

Three interesting implications for the AI ecosystem

This leads to three really interesting implications for where the AI ecosystem is going, and one big unknown that I think people are really lying about.

1. Vendors are specializing

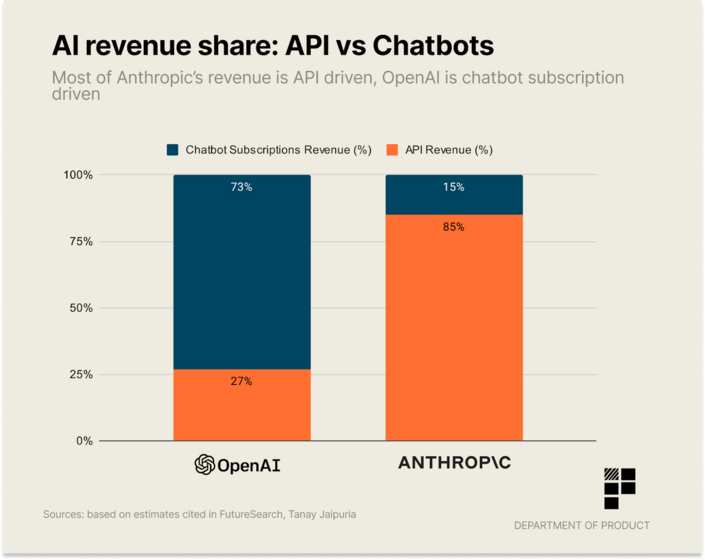

OpenAI's Sam Altman used to say "good luck" to anyone trying to compete with their models, but they simply don't have the best models anymore, and haven't for a while. They seem to be acknowledging this and are focusing more on consumer products built around the models.

They make three-quarters of their revenue from ChatGPT, so they're investing in better native apps, a better experience, and better search: all the consumer experience around the model. They're conceding that they just don't build the best models themselves anymore.

In fact, if you're a vendor using OpenAI's APIs, you should probably switch to Anthropic in almost all cases. That's reflected in Anthropic's revenue: 85% of it comes from the API.

Anthropic, by contrast, invests far less in its consumer products. Even though its model is better, the user experience of its products just isn't as good. They're clearly not investing there; they're putting everything into making the model a few percent better.

Source: Anthropic

2. Specialized hardware is coming

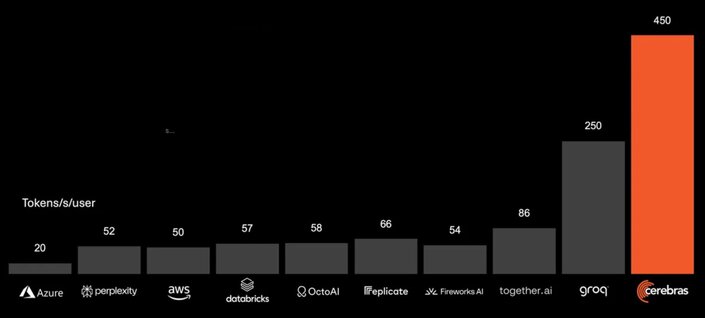

More and more vendors are building specialized hardware for LLMs that can drastically speed up inference.

New chip companies like Groq and Cerebras are able to do LLM inference three to five times faster than typical GPUs.

Source: Cerebras (evaluation of Llama 3.1 - 8B)

The key point here is that they're not just faster. They can also run inference three to five times cheaper, because they're simply much more efficient.

So if we can make AI products that are equally smart but massively faster and cheaper, that could unlock a lot of new use cases that were previously prohibitively slow and expensive.

3. Better utilization of LLMs is coming

The third implication is that vendors are going to get a lot better at figuring out how to use LLMs, even if they don't get that much smarter.

Take Perfect Dark Zero, a launch title for the Xbox 360. It came out when the 360 was brand new, and the graphics are… fine. They're representative of that era of the console.

But what's fascinating is comparing launch titles with titles released late in a console's life. The foundation was exactly the same, with no change to the console or its hardware, yet years later we got games like Halo 4.

Halo 4 looks like it belongs to a whole different console generation, even though it runs on the same hardware as Perfect Dark Zero.

What happened over those years? Developers got way better at optimizing how they use this technology. Game engines found more and more clever approaches to get the most out of what they had.

This is what I think will happen with LLMs. Those who make products on top of LLMs will get better and better at getting more of the smart parts out of them and avoiding the suboptimal, problematic parts.

I don't think we're anywhere close to AGI, but I do think we'll see major leaps in product quality, just by using the LLM technology better and better.

The reality of AI agents

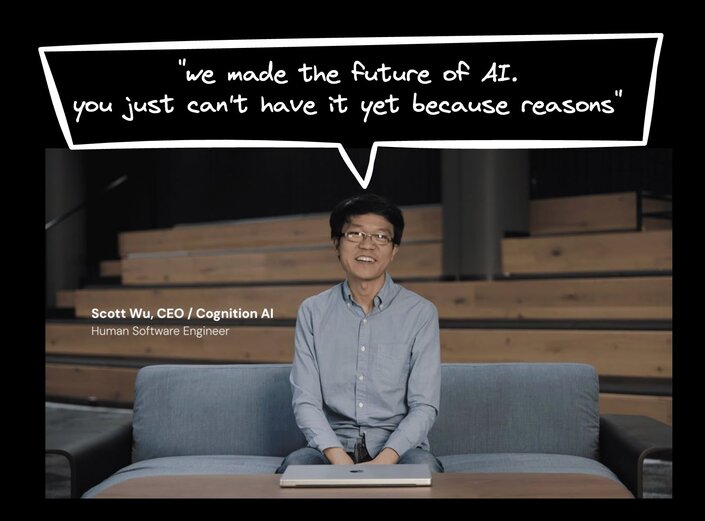

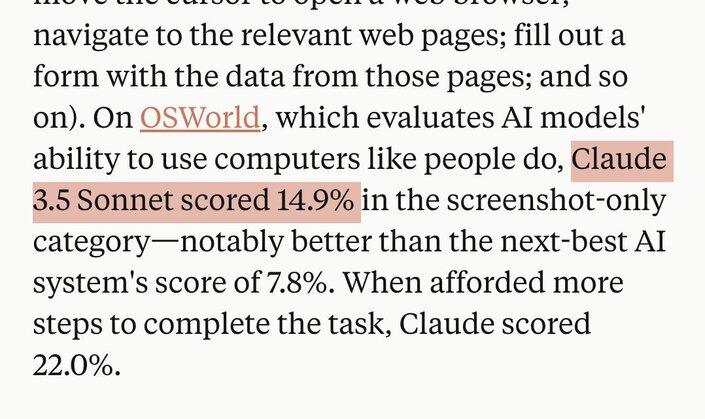

What about agents? What about Devin, the AI software engineer that made all these crazy claims, raised a ton of VC money, and then kind of disappeared off the radar? Or Anthropic's Claude with computer use, which will navigate your computer and use it like you would?

Realistically, I think the models are just not smart enough yet for this to make sense. Even in Anthropic's own announcement post, they noted that Claude with computer use has only a 14.9% success rate on the OSWorld benchmark. I don't see any world where we give AI agents the ability to act on our behalf without them having something closer to a 99%, if not 100%, success rate.

Source: Anthropic

In all of my experience and testing, the models are not smart enough to accomplish autonomous tasks. They need a human in the loop. Otherwise, they derail quickly, because small errors compound very rapidly.
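The compounding effect can be made concrete with a simple model. This is a minimal sketch assuming every step of a task succeeds independently with the same probability p, so an n-step task succeeds end to end with probability p^n. Real agent failures are not independent, and the per-step rates and step counts below are hypothetical, chosen only to illustrate the shape of the curve.

```typescript
// Probability that an agent finishes all `steps` steps without an error,
// ASSUMING each step succeeds independently with probability `perStepSuccess`.
function taskSuccessProbability(perStepSuccess: number, steps: number): number {
  if (perStepSuccess < 0 || perStepSuccess > 1) {
    throw new RangeError("perStepSuccess must be between 0 and 1");
  }
  if (steps < 0 || Math.floor(steps) !== steps) {
    throw new RangeError("steps must be a non-negative integer");
  }
  // Independent steps multiply: p * p * ... * p = p^n
  return Math.pow(perStepSuccess, steps);
}

// Hypothetical per-step success rates and task lengths, for illustration only.
const perStepRates = [0.99, 0.95, 0.9];
const taskLengths = [10, 50];

for (const p of perStepRates) {
  for (const n of taskLengths) {
    const endToEnd = (taskSuccessProbability(p, n) * 100).toFixed(1);
    console.log(`${(p * 100).toFixed(0)}% per step, ${n} steps: ${endToEnd}% end to end`);
  }
}
```

Under these assumptions, even a 99% per-step rate falls to about 60% over 50 steps, and 90% per step falls below 1%. That is the arithmetic behind keeping a human in the loop.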

The future of AI products

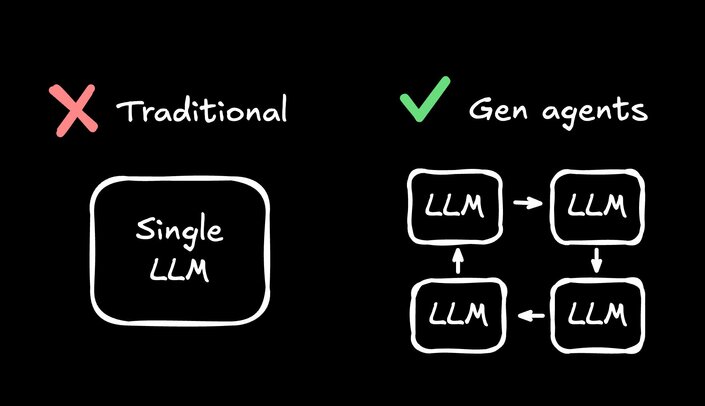

But that's not to say some form of agents won't exist in the short term. I think mini or micro agents do show a lot of practical value. For example, the open-source Micro Agent project can generate tests (or take an existing set of tests) and then write and iterate on code until those tests pass.
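The generate, test, and retry loop described above can be sketched roughly like this. It is not Micro Agent's actual code: the `TestCase` shape, the function names, and especially the stub "model" are hypothetical. A real version would send the task plus test failures to an LLM and run a real test runner; the stub here just returns a wrong draft and then a correct one so the loop is runnable.

```typescript
// A test case: inputs and expected output for the function under test.
interface TestCase {
  input: [number, number];
  expected: number;
}

type Candidate = (a: number, b: number) => number;

// HYPOTHETICAL stand-in for an LLM call: given failure feedback from the
// previous attempt, return a new candidate implementation.
function makeStubModel(): (feedback: string[]) => Candidate {
  const drafts: Candidate[] = [
    (a, b) => a - b, // first draft: wrong operator
    (a, b) => a + b, // second draft: correct
  ];
  let i = 0;
  return (_feedback) => drafts[Math.min(i++, drafts.length - 1)];
}

// Run every test and collect readable failure messages to feed back.
function runTests(fn: Candidate, tests: TestCase[]): string[] {
  const failures: string[] = [];
  for (const t of tests) {
    const actual = fn(t.input[0], t.input[1]);
    if (actual !== t.expected) {
      failures.push(`f(${t.input.join(", ")}) returned ${actual}, expected ${t.expected}`);
    }
  }
  return failures;
}

// Generate, test, feed failures back, and retry until green or out of attempts.
function iterateUntilGreen(
  generate: (feedback: string[]) => Candidate,
  tests: TestCase[],
  maxAttempts: number
): { fn: Candidate | null; attempts: number } {
  let feedback: string[] = [];
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const candidate = generate(feedback);
    feedback = runTests(candidate, tests);
    if (feedback.length === 0) return { fn: candidate, attempts: attempt };
  }
  return { fn: null, attempts: maxAttempts };
}

const tests: TestCase[] = [
  { input: [1, 2], expected: 3 },
  { input: [-4, 4], expected: 0 },
];
const result = iterateUntilGreen(makeStubModel(), tests, 5);
console.log(result.fn ? `passed after ${result.attempts} attempt(s)` : "gave up");
```

The cap on attempts matters: without it, a model that never converges would loop forever, which is exactly the derailing behavior a human in the loop is there to catch.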

Similarly, with Builder.io's product that uses AI to turn Figma designs into code, we've found that rather than using one big, traditional LLM, we can use a series of smaller agents that communicate with each other to get much higher-quality output when converting a Figma design into code.

By combining a series of smaller agents with self-trained models, we found that we can increase reliability, speed, and accuracy compared to just plugging in something off the shelf.
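Here is a minimal sketch of the general idea of chaining small, specialized steps, each with a cheap check on its output, instead of one big model call. It is not Builder.io's actual pipeline: the stage names, the `DesignNode` and `ElementNode` shapes, and the retry policy are all hypothetical, and the stages are deterministic stubs standing in for small trained models or narrow LLM calls.

```typescript
// One small, focused step in a hypothetical multi-agent pipeline.
interface Stage {
  name: string;
  run: (input: unknown) => unknown;       // in practice: a narrow model call
  validate: (output: unknown) => boolean; // cheap check before handing off
}

// Run stages in order, passing each output to the next stage.
// A stage whose output fails validation is retried up to maxRetries times.
function runPipeline(stages: Stage[], input: unknown, maxRetries = 2): unknown {
  let current = input;
  for (const stage of stages) {
    let output: unknown = undefined;
    let ok = false;
    for (let attempt = 0; attempt <= maxRetries && !ok; attempt++) {
      output = stage.run(current);
      ok = stage.validate(output);
    }
    if (!ok) throw new Error(`stage "${stage.name}" failed validation`);
    current = output;
  }
  return current;
}

// Hypothetical, simplified design tree and element tree.
interface DesignNode {
  type: "frame" | "text";
  text?: string;
  children?: DesignNode[];
}

interface ElementNode {
  tag: "div" | "p";
  text?: string;
  children: ElementNode[];
}

// Stub stage 1: map design nodes to elements (frame -> div, text -> p).
function toElement(node: DesignNode): ElementNode {
  return {
    tag: node.type === "frame" ? "div" : "p",
    text: node.text,
    children: (node.children || []).map(toElement),
  };
}

// Stub stage 2: emit markup (no HTML escaping; illustration only).
function toMarkup(el: ElementNode): string {
  const inner = (el.text || "") + el.children.map(toMarkup).join("");
  return `<${el.tag}>${inner}</${el.tag}>`;
}

const stages: Stage[] = [
  {
    name: "map-components",
    run: (input) => toElement(input as DesignNode),
    validate: (out) => typeof out === "object" && out !== null && "tag" in out,
  },
  {
    name: "emit-markup",
    run: (input) => toMarkup(input as ElementNode),
    validate: (out) => typeof out === "string" && out.charAt(0) === "<",
  },
];

const design: DesignNode = { type: "frame", children: [{ type: "text", text: "Hello" }] };
console.log(runPipeline(stages, design)); // <div><p>Hello</p></div>
```

The design choice being illustrated is that each stage has a narrow job and a check on its output, so a bad result is caught and retried at the step that produced it rather than surfacing only in the final code.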

These are the kinds of techniques that I think will bring us into the next era of AI products. Even if LLMs don't get smarter and we don't have personal coding assistants doing all our work tomorrow, we sure as heck will keep getting better, more reliable products.

We'll automate tedious work, like converting designs to code, using generative AI to produce designs and functionality, adding functionality to your designs, and turning them into real-world applications. We'll also develop other interesting use cases around content generation, code generation, copilots, and the other kinds of products Builder.io keeps producing.

Just be sure to pay close attention to which products people are actually using versus which are gathering hype but don't actually work. Both will keep happening; I'm certain of that. I don't think we're anywhere near being past the hype curve, where BS products are intermixed with really effective ones.

So while I think there'll be a lot of noise in the market, I think we have a lot to look forward to as well.

Design and code in one platform

Builder.io visually edits code, uses your design system, and sends pull requests.
