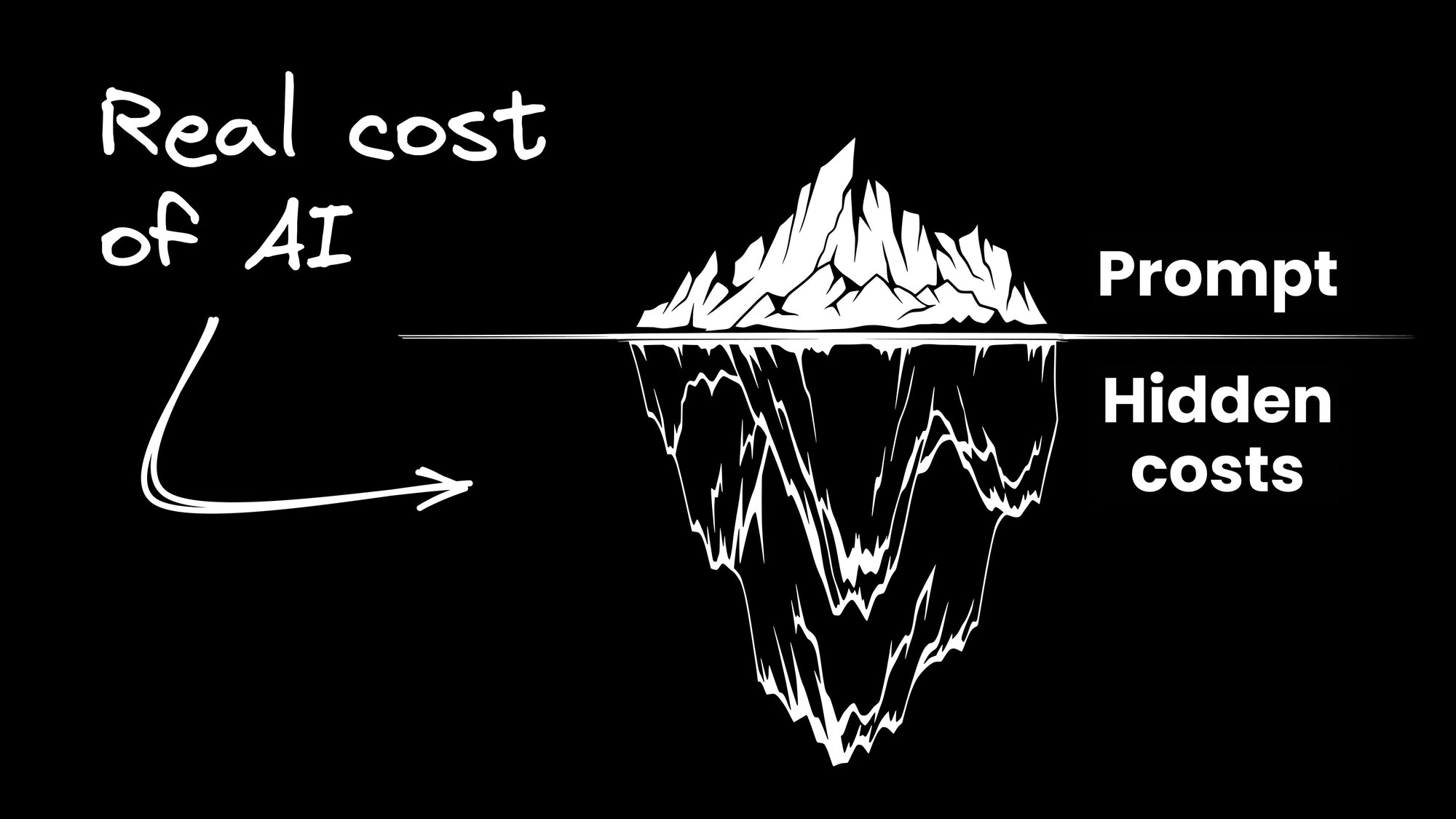

"Unlimited AI for $20 a month" is one of the most compelling (and misleading) pitches in software right now.

It's compelling because people want it to be true. It's misleading because it hides how AI actually works under the hood.

But here's the truth: every AI interaction costs money. Sometimes a lot of money. And when tools pretend otherwise, they're either hiding the math or gambling on your apathy. Neither approach scales.

If you're building or buying AI-powered products, pricing isn't just a detail. It's a product decision. It determines whether your best-case scenario (a customer who loves your product and uses it constantly) turns into your worst-case scenario: a customer who costs you more than they pay you.

Here's how I think about AI pricing, what we've learned building Builder, and how teams can avoid getting burned.

LLM providers charge by the token. That's not new. But what people miss is how unpredictable that usage becomes.

It's not just "prompt in, response out." It's: what model are you using? How long is the input? How many outputs? How many times per day? Multiply that across users and teams, and suddenly the cost structure looks more like a cloud compute bill than a SaaS invoice.
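
To see why this starts to look like a compute bill, here's a rough back-of-the-envelope sketch. The per-token prices, token counts, and usage figures are placeholder assumptions, not any provider's actual rates; the point is how the variables multiply. Most LLM APIs price input and output tokens separately, so the sketch does too.

```python
from dataclasses import dataclass


@dataclass
class ModelPrice:
    # Hypothetical prices in USD per million tokens (placeholders, not real rates).
    input_per_mtok: float
    output_per_mtok: float


def request_cost(price: ModelPrice, input_tokens: int, output_tokens: int) -> float:
    """Cost of one call: input and output tokens are billed at different rates."""
    return (input_tokens * price.input_per_mtok
            + output_tokens * price.output_per_mtok) / 1_000_000


def monthly_cost(price: ModelPrice, input_tokens: int, output_tokens: int,
                 requests_per_day: int, users: int, days: int = 30) -> float:
    """Scale a single call's cost by request frequency, team size, and days."""
    return request_cost(price, input_tokens, output_tokens) * requests_per_day * users * days


if __name__ == "__main__":
    model = ModelPrice(input_per_mtok=3.0, output_per_mtok=15.0)  # assumed rates
    light = monthly_cost(model, input_tokens=2_000, output_tokens=500,
                         requests_per_day=10, users=50)
    heavy = monthly_cost(model, input_tokens=20_000, output_tokens=2_000,
                         requests_per_day=100, users=50)
    print(f"light team: ${light:,.2f}/month")
    print(f"heavy team: ${heavy:,.2f}/month")
```

Same model, same 50-person team: longer prompts and more frequent requests move the estimate from about $200 to $13,500 a month under these assumed numbers. That spread is what makes forecasting so hard.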

That's fine if you're the buyer and understand what's happening. But for most customers, especially at scale, that kind of unpredictability is a dealbreaker.

We've had to wrestle with this directly. In one of our largest deals, usage-based pricing almost killed the entire agreement. Their procurement team needed to forecast spend, and we couldn't tell them what their AI usage would look like. Nobody can.

This is the paradox. Your most successful customers use your product the most. And in AI, that means they also cost you the most. If your pricing isn't built to handle that, your growth will break you.
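
The paradox is easy to see with a flat subscription. A minimal sketch, assuming a hypothetical $20/month "unlimited" plan and made-up monthly model costs per user: the margin shrinks as engagement grows, then goes negative for exactly the customers you most want to keep.

```python
def flat_fee_margin(monthly_fee: float, model_cost: float) -> float:
    """Profit (positive) or loss (negative) on one subscriber under a flat fee."""
    return monthly_fee - model_cost


FEE = 20.0  # hypothetical flat plan price, USD per month

# Hypothetical monthly model costs for increasingly engaged users, in USD.
users = [("casual", 2.0), ("regular", 12.0), ("power user", 45.0)]

for label, cost in users:
    print(f"{label:>10}: margin ${flat_fee_margin(FEE, cost):+.2f}")
```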

Token-based pricing? Too abstract. Customers can't do the math.

Per-message pricing? Sounds simple. But it punishes tiny edits the same as huge requests. We saw users gaming it, bundling prompts into mega-asks just to save credits. That led to bad outcomes and worse UX.
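
Here's why a flat per-message price invites that behavior. A sketch under assumed numbers: the per-message price and per-call model costs are hypothetical, and it makes the simplifying assumption that bundling four asks into one prompt costs the same in total as sending them separately.

```python
# Hypothetical flat price charged per message, in USD.
PRICE_PER_MESSAGE = 0.10


def per_message_billing(call_costs: list[float]) -> tuple[float, float]:
    """Bill every call at the same flat price; return (user pays, vendor margin)."""
    revenue = PRICE_PER_MESSAGE * len(call_costs)
    return revenue, revenue - sum(call_costs)


# Hypothetical underlying model cost of each call, in USD.
scenarios = {
    "tiny edit": [0.005],
    "four separate asks": [0.04, 0.04, 0.04, 0.04],
    # Simplifying assumption: the bundled prompt costs the same as the four asks combined.
    "one bundled mega-ask": [0.16],
}

for name, costs in scenarios.items():
    paid, margin = per_message_billing(costs)
    print(f"{name:>22}: user pays ${paid:.2f}, vendor margin ${margin:+.2f}")
```

The tiny edit is billed at 20 times its model cost, while bundling the same work into one message cuts the user's bill to a quarter and flips the vendor's margin negative. Neither side is getting a price that tracks the work.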

We even let some customers plug in their own LLM API keys. Not one of them used it in production at scale. Theoretically interesting. In practice? Too annoying.

After testing everything, the best model we've found is simple. Pass through the LLM cost, plus a modest markup. Think 20 to 25 percent. Predictable, fair, sustainable.
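
In code, that pass-through model is a single multiplication. A minimal sketch, assuming the markup is applied to the metered model cost for each billing period; the function name and the example costs are hypothetical, and the 20 percent default reflects the range above.

```python
def cost_plus_price(model_cost: float, markup: float = 0.20) -> float:
    """Pass the metered LLM cost through to the customer, plus a percentage markup."""
    if markup < 0:
        raise ValueError("markup must be non-negative")
    return model_cost * (1 + markup)


# Hypothetical monthly model costs, in USD, for a light and a heavy customer.
for cost in (50.0, 5_000.0):
    low, high = cost_plus_price(cost, 0.20), cost_plus_price(cost, 0.25)
    print(f"model cost ${cost:,.2f} -> bill ${low:,.2f} to ${high:,.2f}")
```

Because the bill scales with the same driver as the cost, the vendor's margin is always `markup × model_cost`. A heavy user raises revenue in step with cost instead of eroding it, and the customer can trace every dollar back to usage.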

When I'm evaluating AI tools, here's what makes me walk away.

Over-abstracted cost models. If I can't trace what I'm paying for, I assume I'm getting fleeced.

Pricing below the cost of the model itself. That's not a product. That's a gym membership. You're banking on me not using it.

Huge markups on thin wrappers. If your app is just calling ChatGPT with a little UI, I can build that myself. And probably will.

I'm fine paying for value. But the value better be clear. If I'm using Claude Opus, tell me. If you're subbing in an open-source model and charging me like it's GPT-4.5, that's a problem.

In theory, pricing based on outcomes rather than usage is perfect. In practice, nobody can measure outcomes accurately.

I've seen companies try to price based on developer productivity, ticket resolution, feature output, you name it. But the metrics are always noisy, and the math never holds up. The companies that tried it have largely backed away.

Maybe it'll work once model costs drop further. But today, in a market where margins are thin and demand is high, cost-aligned pricing is the only thing that scales.

So what do customers actually want from AI pricing? Three things.

- Predictability. Nobody wants a surprise bill.

- Transparency. Don't hide the model. Don't fake the value.

- Fairness. If I use more, I'll pay more. But the math should make sense.

That's it. Not clever bundling. Not gamified credit packs. Just honest, sustainable pricing that lets people feel in control.

As models get cheaper, and they will, this whole landscape will shift. Over time, more AI tools will be able to absorb costs or offer flexible tiered pricing that still works at scale.

But we're not there yet. Right now, if you're building AI into your product or buying it from someone else, the only responsible path is one rooted in clarity and alignment.

Because the worst-case scenario isn't a high bill. It's a great customer you can't afford to keep.

Builder.io visually edits code, uses your design system, and sends pull requests.