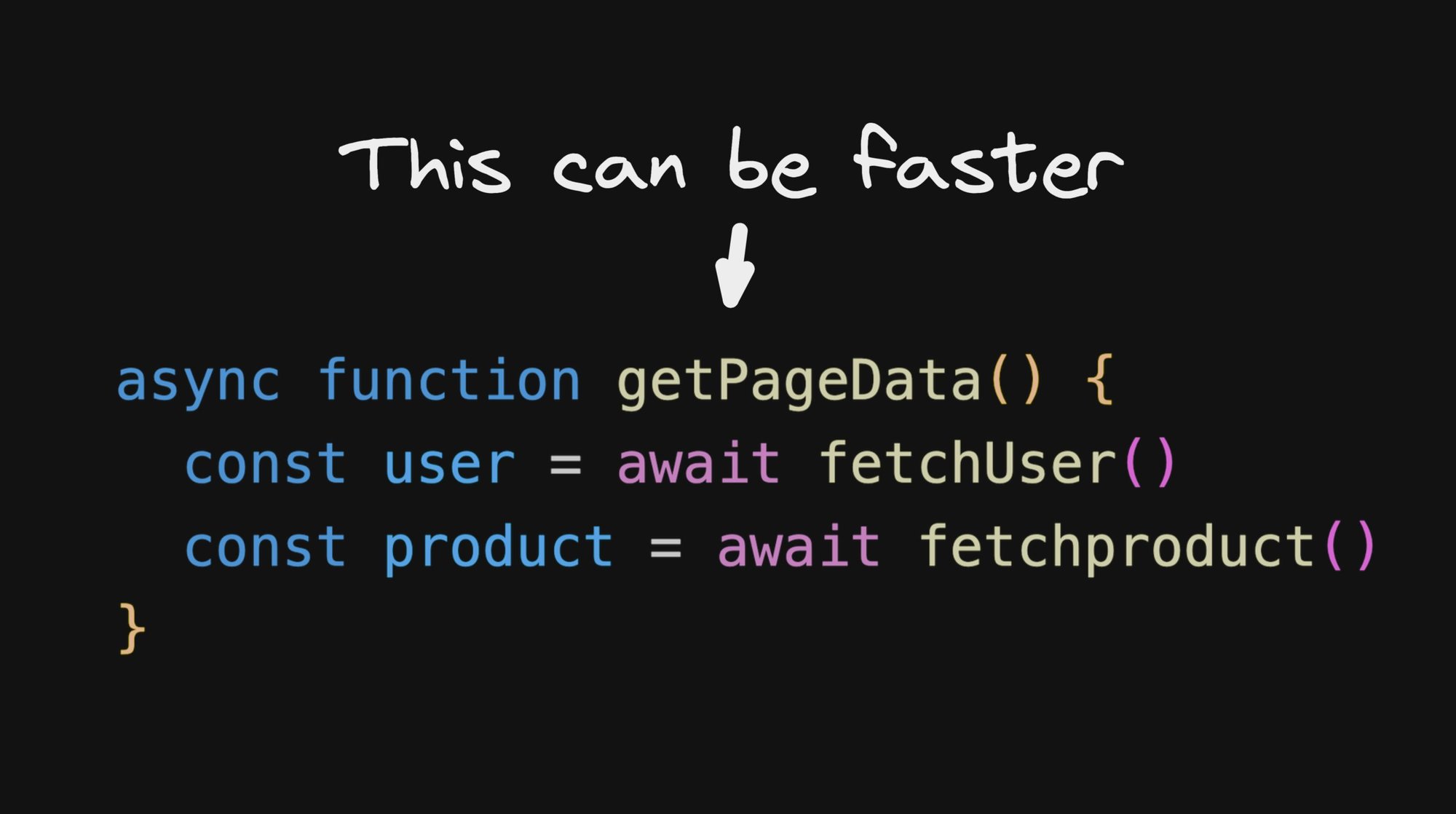

Did you know that we can optimize this function to complete in as little as half of the time?

```js
async function getPageData() {
  const user = await fetchUser()
  const product = await fetchProduct()
}
```

In this function, we’re awaiting a fetch for a user, and then a fetch for a product, sequentially.

But one doesn’t depend on the other, so we don’t have to wait for one to complete before we fire off the request for the next.

Instead, we could fire both requests together, and await both concurrently.

One way to do this is utilizing Promise.all, like so:

```js
async function getPageData() {
  const [user, product] = await Promise.all([
    fetchUser(), fetchProduct()
  ])
}
```

Nifty!

Now imagine that each of those requests takes 1 second to respond. In our original function we wait for both in a row, totaling 2 seconds. In this new function we wait for both concurrently, so it completes in 1 second: half the time!
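To see the difference in practice, here is a minimal sketch that times both approaches. The `delay` helper and the 100 ms `fetchUser` / `fetchProduct` stand-ins are assumptions made for illustration, not real network calls.

```js
// Hypothetical stand-ins for real requests: each resolves after `ms` milliseconds
const delay = (ms, value) =>
  new Promise(resolve => setTimeout(() => resolve(value), ms))

const fetchUser = () => delay(100, { name: 'Ada' })
const fetchProduct = () => delay(100, { title: 'Widget' })

// Awaits one request, then starts the next
async function sequential() {
  const start = Date.now()
  await fetchUser()
  await fetchProduct()
  return Date.now() - start
}

// Starts both requests, then waits for both
async function concurrent() {
  const start = Date.now()
  await Promise.all([fetchUser(), fetchProduct()])
  return Date.now() - start
}

async function compare() {
  const sequentialMs = await sequential()
  const concurrentMs = await concurrent()
  return { sequentialMs, concurrentMs }
}

compare().then(({ sequentialMs, concurrentMs }) => {
  console.log(`sequential: ${sequentialMs}ms, concurrent: ${concurrentMs}ms`)
})
```

The sequential version should take roughly the sum of the two delays, and the concurrent version roughly the longer of the two.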

But… there’s just one problem.

First, we’re not handling errors at all here.

So you could say “sure, I’ll put this in a big ole try-catch block”.

```js
async function getPageData() {
  try {
    const [user, product] = await Promise.all([
      fetchUser(), fetchProduct()
    ])
  } catch (err) {
    // 🚩 this has a big problem...
  }
}
```

But this actually has a major issue.

Let's say fetchUser completes first with an error. That will trigger our catch block and then continue on with the function.

But here is the kicker: if fetchProduct then errors afterward, this will not trigger the catch block. The promise returned by Promise.all has already rejected with the first error, and a promise can only settle once. The catch code has run, the function has completed, and we’ve moved on.

So the second rejection is silently discarded. Promise.all attaches its own handlers to every promise it is given, so the runtime does not even report it as an unhandled promise rejection. Ack.

So if we have some kind of handling logic, that prompts the user or saves to an error logging service, like so:

```js
// ...
} catch (err) {
  handle(err)
}
// ...

function handle(err) {
  alertToUser(err)
  saveToLoggingService(err)
}
```

We will sadly only be made aware of the first error. The second error will be lost in the ether: no user feedback, nothing captured in our error logs, and not even a warning in the console. It’s effectively invisible.
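Here is a small runnable sketch of that behavior. The `failAfter` helper and the failing `fetchUser` / `fetchProduct` stand-ins are hypothetical, made up so that both requests reject at slightly different times.

```js
// Hypothetical failing requests: the user fetch rejects first, the product fetch a bit later
const failAfter = (ms, message) =>
  new Promise((_, reject) => setTimeout(() => reject(new Error(message)), ms))

const fetchUser = () => failAfter(10, 'user failed')
const fetchProduct = () => failAfter(30, 'product failed')

async function getPageData() {
  const seen = []
  try {
    await Promise.all([fetchUser(), fetchProduct()])
  } catch (err) {
    // Runs once, with whichever rejection happened first
    seen.push(err.message)
  }
  // Wait long enough for the second rejection to have happened too
  await new Promise(resolve => setTimeout(resolve, 60))
  return seen
}

getPageData().then(seen => console.log(seen)) // [ 'user failed' ]
```

Only the first error reaches the catch block. The second rejection is absorbed by Promise.all, so nothing in our code ever sees it.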

One solution to our above issue is to pass a function to .catch(), for instance like this:

```js
function onReject(err) {
  handle(err)
  return err
}

async function getPageData() {
  const [user, product] = await Promise.all([
    fetchUser().catch(onReject), // ⬅️
    fetchProduct().catch(onReject) // ⬅️
  ])

  if (user instanceof Error) {
    // ✅ Already reported by onReject; bail out or fall back here
  }
  if (product instanceof Error) {
    // ✅ Already reported by onReject; bail out or fall back here
  }
}
```

In this case, if we get an error, we handle the error and return it. So now our resulting user and product values are either an Error, which we can check with instanceof, or otherwise our actual good result.

This ain't so bad, and solves our prior issues.

But the main drawback here is that we need to make sure we are always providing that .catch(onReject), religiously, throughout our code. This is sadly quite easy to miss, and it is also not easy to write a bulletproof ESLint rule for.

As a side note, it’s useful to keep in mind that we don’t always need to immediately await a promise after creating it. Another technique we can use here, which is virtually the same, is this:

```js
async function getPageData() {
  // Fire both requests together
  const userPromise = fetchUser().catch(onReject)
  const productPromise = fetchProduct().catch(onReject)

  // Await together
  const user = await userPromise
  const product = await productPromise

  // Check individually (onReject has already reported any error)
  if (user instanceof Error) {
    // Bail out or fall back here
  }
  if (product instanceof Error) {
    // Bail out or fall back here
  }
}
```

Because we fire off each fetch before we await either one, this version has the same performance benefits as our examples above that use Promise.all.

Additionally, in this format, we can safely use try/catch if we like without the issues we had previously. Note that because onReject returns the error instead of rethrowing it, the catch blocks here only run if onReject itself throws:

```js
async function getPageData() {
  const userPromise = fetchUser().catch(onReject)
  const productPromise = fetchProduct().catch(onReject)

  // Try/catch each
  try {
    const user = await userPromise
  } catch (err) {
    handle(err)
  }

  try {
    const product = await productPromise
  } catch (err) {
    handle(err)
  }
}
```

Between these three, I personally like the Promise.all version, as it feels more idiomatic to say “wait for these two things together”. But that said, I think this just comes down to personal preference.

Another solution, that is built into JavaScript, is to use Promise.allSettled.

With Promise.allSettled, instead of getting the user and product back directly, we get a result object that contains the value or error of each promise result.

```js
async function getPageData() {
  const [userResult, productResult] = await Promise.allSettled([
    fetchUser(), fetchProduct()
  ])
}
```

The result objects have 3 properties:

- status - Either "fulfilled" or "rejected"
- value - Only present if status is "fulfilled". The value that the promise was fulfilled with.
- reason - Only present if status is "rejected". The reason that the promise was rejected with.
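As a quick illustration of those shapes, here is a minimal sketch that settles one fulfilled and one rejected promise. The inline `Promise.resolve` and `Promise.reject` calls are stand-ins for real requests.

```js
function inspectResults() {
  return Promise.allSettled([
    Promise.resolve({ name: 'Ada' }),       // stands in for a successful fetchUser()
    Promise.reject(new Error('not found'))  // stands in for a failing fetchProduct()
  ])
}

inspectResults().then(([userResult, productResult]) => {
  console.log(userResult.status, userResult.value)             // fulfilled { name: 'Ada' }
  console.log(productResult.status, productResult.reason.message) // rejected not found
  // Each result carries only the property that matches its status
  console.log('reason' in userResult, 'value' in productResult)   // false false
})
```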

So we can now read what the status of each promise was, and process each error individually, without losing any of this critical information:

```js
async function getPageData() {
  // Fire and await together
  const [userResult, productResult] = await Promise.allSettled([
    fetchUser(), fetchProduct()
  ])

  // Process user
  if (userResult.status === 'rejected') {
    const err = userResult.reason
    handle(err)
  } else {
    const user = userResult.value
  }

  // Process product
  if (productResult.status === 'rejected') {
    const err = productResult.reason
    handle(err)
  } else {
    const product = productResult.value
  }
}
```

But that is kind of a lot of boilerplate. So let’s abstract this down:

```js
async function getPageData() {
  const results = await Promise.allSettled([
    fetchUser(), fetchProduct()
  ])

  // Nicer on the eyes
  const [user, product] = handleResults(results)
}
```

And we can implement a simple handleResults function like so:

```js
// Generic function to throw if any errors occurred, or return the responses
// if no errors happened
function handleResults(results) {
  const errors = results
    .filter(result => result.status === 'rejected')
    .map(result => result.reason)

  if (errors.length) {
    // Aggregate all errors into one
    throw new AggregateError(errors)
  }

  return results.map(result => result.value)
}
```

We are able to use a nifty trick here, the AggregateError class, to throw a single error that wraps several. This way, when it is caught, we get every underlying error via the .errors property on the AggregateError:

```js
async function getPageData() {
  const results = await Promise.allSettled([
    fetchUser(), fetchProduct()
  ])

  try {
    const [user, product] = handleResults(results)
  } catch (err) {
    for (const error of err.errors) {
      handle(error)
    }
  }
}
```

And hey, this is pretty simple, nice and generic. I like it.
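To check that no error gets lost with this pattern, here is a self-contained sketch in which both requests fail. The failing `fetchUser` / `fetchProduct` stand-ins and the message passed to AggregateError are assumptions for illustration; `handleResults` is otherwise the same helper as above.

```js
// Same helper as above: throw one AggregateError if anything rejected
function handleResults(results) {
  const errors = results
    .filter(result => result.status === 'rejected')
    .map(result => result.reason)
  if (errors.length) {
    throw new AggregateError(errors, 'One or more requests failed')
  }
  return results.map(result => result.value)
}

// Hypothetical requests that both fail
const fetchUser = () => Promise.reject(new Error('user failed'))
const fetchProduct = () => Promise.reject(new Error('product failed'))

async function getPageData() {
  const results = await Promise.allSettled([fetchUser(), fetchProduct()])
  const messages = []
  try {
    handleResults(results)
  } catch (err) {
    // err.errors holds every rejection reason, in input order
    for (const error of err.errors) messages.push(error.message)
  }
  return messages
}

getPageData().then(messages => console.log(messages))
// [ 'user failed', 'product failed' ]
```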

So that settles awaiting multiple promises concurrently where we need the result of both. But there are two additional Promise concurrency methods we get in JavaScript that can be useful to be aware of.

Don’t miss the warnings on these though (below), as while they are interesting and occasionally useful, I would use them with caution.

One method we get is Promise.race, which takes an iterable of promises and returns a single Promise that settles with the eventual state of the first promise that settles.

For example, we could implement a simple timeout like so:

```js
// Race to see which Promise completes first
const racePromise = Promise.race([
  doSomethingSlow(),
  new Promise((resolve, reject) =>
    // Time out after 5 seconds
    setTimeout(() => reject(new Error('Timeout')), 5000)
  )
])

try {
  const result = await racePromise
} catch (err) {
  // Timed out!
}
```

⚠️ Note: this isn’t always ideal, as generally if you have a timeout you should cancel the outstanding pending task if at all possible.

So for example if doSomethingSlow() fetched data, we’d generally want to abort the fetch upon a timeout using an AbortController instead of just rejecting the race promise and moving about our business, letting the request continue to hang for no good reason.

But this is just an example to demonstrate the basic concept, so hopefully you get the idea.
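For comparison, here is a rough sketch of the abort-on-timeout idea. It swaps Promise.race for an AbortController, and both `withTimeout` and this signal-aware `doSomethingSlow` are hypothetical helpers written for this example. With a real request you would pass the signal to `fetch(url, { signal })` instead.

```js
// Hypothetical slow task that honors an AbortSignal, the way fetch does
function doSomethingSlow(signal) {
  return new Promise((resolve, reject) => {
    const timer = setTimeout(() => resolve('done'), 200)
    signal.addEventListener('abort', () => {
      clearTimeout(timer) // actually stop the outstanding work
      reject(new Error('Aborted'))
    }, { once: true })
  })
}

// Run `task` with a deadline, aborting it if the deadline passes first
async function withTimeout(task, ms) {
  const controller = new AbortController()
  let timedOut = false
  const timer = setTimeout(() => {
    timedOut = true
    controller.abort()
  }, ms)
  try {
    return await task(controller.signal)
  } catch (err) {
    // Report our own timeout distinctly from other failures
    throw timedOut ? new Error('Timeout') : err
  } finally {
    clearTimeout(timer)
  }
}

withTimeout(doSomethingSlow, 50).catch(err => console.log(err.message)) // Timeout
```

The design difference from the race version is that the slow task is told to stop, rather than being left running with nobody listening.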

Also, like always, it’s still best to handle all errors of all promises as well:

```js
const racePromise = Promise.race([
  doSomethingSlow().catch(onReject), // ✅
  // ...
```

The final method we get is Promise.any, which is similar to Promise.race, but instead waits for the first promise to fulfill, and only rejects (with an AggregateError) if all of the promises reject.

This can be useful, for example, in situations where it can be unpredictable which location for a piece of data is faster:

```js
const anyPromise = Promise.any([
  getSomethingFromPlaceA(), getSomethingFromPlaceB()
])

try {
  const winner = await anyPromise
} catch (err) {
  // Darn, both failed
}
```

Similar to the above, an ideal solution here would abort the slower request once the faster one completes. But again, these are just simple contrived examples to demonstrate the basics.
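To make the behavior concrete, here is a small sketch covering both outcomes. The `delay`, `failAfter`, and `firstSuccess` helpers are hypothetical names introduced for this example.

```js
// Hypothetical helpers: settle after `ms` milliseconds
const delay = (ms, value) =>
  new Promise(resolve => setTimeout(() => resolve(value), ms))
const failAfter = (ms, message) =>
  new Promise((_, reject) => setTimeout(() => reject(new Error(message)), ms))

async function firstSuccess(promises) {
  try {
    return await Promise.any(promises)
  } catch (err) {
    // Promise.any rejects with an AggregateError once every input has rejected
    return err.errors.map(error => error.message)
  }
}

// A fails fast, B succeeds later: the fulfilled value wins even though it settled second
firstSuccess([failAfter(10, 'A failed'), delay(30, 'from B')])
  .then(result => console.log(result)) // from B

// Both fail: we get every rejection reason, in input order
firstSuccess([failAfter(30, 'A failed'), failAfter(10, 'B failed')])
  .then(result => console.log(result)) // [ 'A failed', 'B failed' ]
```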

⚠️ Note: we don’t always want to hammer on multiple data sources concurrently just because we can (e.g. just because it might save the user a fraction of a second). So use this wisely, and sparingly.

Oh yeah, and like always, we really don’t want unhandled promise rejections, so you know what to do:

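```js
const anyPromise = Promise.any([
  // Rethrow after handling: a handler that returns the error would fulfill and could "win"
  getSomethingFromPlaceA().catch(err => { handle(err); throw err }), // ✅
  getSomethingFromPlaceB().catch(err => { handle(err); throw err }) // ✅
])
```

Before we get too excited and concurrentify all of our code, let’s not forget three things.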

Concurrency is awesome, but excessive parallelization can lead to network thrashing, disk thrashing, or other issues. Use good judgment and avoid craziness like this:

```js
// ❌ please don't
const results = await Promise.allSettled([
  fetchUser(),
  fetchProduct(),
  getAnotherThing(),
  andAnotherThing(),
  andYetAnotherThing(),
  andMoreThings(),
  areYouEvenStillReading(),
  thisIsExcessive(),
  justPleaseDont()
])
```

Just to avoid confusion: when we talk about concurrency here, we are referring to awaiting promises concurrently, not executing code concurrently.

JavaScript is and always has been a single threaded language, so let’s not forget that.
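A small sketch of what that means in practice: two async tasks started together still run their synchronous work one after the other, and only interleave at an `await`. The `task` and `run` functions are hypothetical, written just for this demonstration.

```js
async function task(name, log) {
  log.push(`${name}: start`)
  // Synchronous work: nothing else can run until this loop finishes
  let total = 0
  for (let i = 0; i < 1e6; i++) total += i
  log.push(`${name}: sync work done`)
  await null // yield; only now can other queued work run
  log.push(`${name}: resumed`)
}

async function run() {
  const log = []
  await Promise.all([task('A', log), task('B', log)])
  return log
}

run().then(log => console.log(log))
// [
//   'A: start', 'A: sync work done',
//   'B: start', 'B: sync work done',
//   'A: resumed', 'B: resumed'
// ]
```

Task B cannot even start until task A reaches its first `await`, which is why concurrent awaiting helps with waiting on I/O but not with CPU-heavy work.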

Sometimes sequential code is simply easier to reason about and manage.

```js
// It sure is simple and easy to read, isn't it
async function getPageData() {
  const user = await fetchUser()
  const product = await fetchProduct()
}
```

Avoid premature optimization and be sure you have a good reason before adding more complexity. Being fast is great, but consider if it’s even needed before blindly concurrentifying everything in your code.

Use tools to solve problems, not create them.

Promises in JavaScript are powerful, and even more so when you realize the concurrent possibilities they unlock as well.

While you should use caution before going overly heavy on turning sequential async/await code into concurrent awaits, JavaScript has a number of useful built-in tools worth knowing that can help you speed things up when you need to.

Tip: Visit our JavaScript hub to learn more.

Hi! I'm Steve, CEO of Builder.io.

We make a way to drag + drop with your components to create pages and other CMS content on your site or app, visually.

You may find it interesting or useful:

Builder.io visually edits code, uses your design system, and sends pull requests.
